I'm interested in combining research in computer vision, computer graphics, and machine learning to understand human motion. Much of my research focuses on hand and object tracking from images.

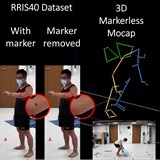

Prayook Jatesiktat, Guan Ming Lim, Wee Sen Lim, Wei Tech Ang. JBHI, 2024. Project page.

The RRIS40 dataset uses anatomical landmarks from marker-based motion capture, combined with deep learning techniques, to achieve accurate 3D markerless human motion capture.

Guan Ming Lim, Prayook Jatesiktat, Wei Tech Ang. EMBC, 2023. Project page.

Real-time, accurate, and robust tracking of a rigid object using image-based methods such as efficient projective point correspondence and precomputed sparse viewpoint information.

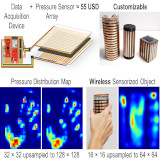

Guan Ming Lim, Prayook Jatesiktat, Christopher Wee Keong Kuah, Wei Tech Ang. EMBC, 2023. Project page.

A low-cost, modular, and wireless pressure sensor array that generates a real-time pressure distribution map for object pose estimation and grasp classification.

Guan Ming Lim, Prayook Jatesiktat, Wei Tech Ang. ICONIP, 2020. Project page / code / video.

Real-time estimation of 3D hand shape and pose from a single RGB image, running at over 110 Hz on a GPU or 75 Hz on a CPU.

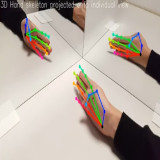

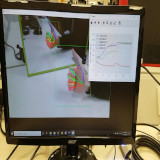

Guan Ming Lim, Prayook Jatesiktat, Christopher Wee Keong Kuah, Wei Tech Ang. EMBC, 2020. Project page / code / video.

A camera-based system for markerless hand pose estimation that uses a mirror-based multi-view setup to avoid the complexity of synchronizing multiple cameras and to reduce occlusion.

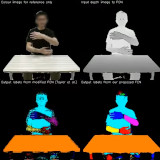

Guan Ming Lim, Prayook Jatesiktat, Christopher Wee Keong Kuah, Wei Tech Ang. EMBC, 2019. Project page / code / video.

Semantic segmentation of body parts (e.g. hand and arm) and objects from depth images. A fully convolutional neural network is trained on synthetic data and shows some degree of generalization to real data.

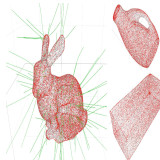

Prayook Jatesiktat, Ming Jeat Foo, Guan Ming Lim, Wei Tech Ang. ICCS, 2018. Supplementary material.

SDF-Net is a simple multilayer perceptron (memory footprint < 10 kB) that models the signed distance function (SDF) of a rigid object, enabling real-time tracking of the object (≈ 1.29 ms per frame on 1 CPU core) with a single depth camera.

Guan Ming Lim, Prayook Jatesiktat, Christopher Wee Keong Kuah, Wei Tech Ang. CAREhab, Singapore Rehabilitation Conference, 2020.

Design and source code from Jon Barron's website.